Key insights from our webinar on content scrapers and AI search optimisation.

Context

On 3rd June 2025, our Managing Director, David Eccles, hosted a webinar for our clients to tackle the pressing question facing every organisation today: should you let AI bots access your website content?

The session brought together people we work with from museums, galleries, universities, charities, and policy organisations. Here we’re sharing the key takeaways to help you make this critical decision.

The stakes are higher than you think

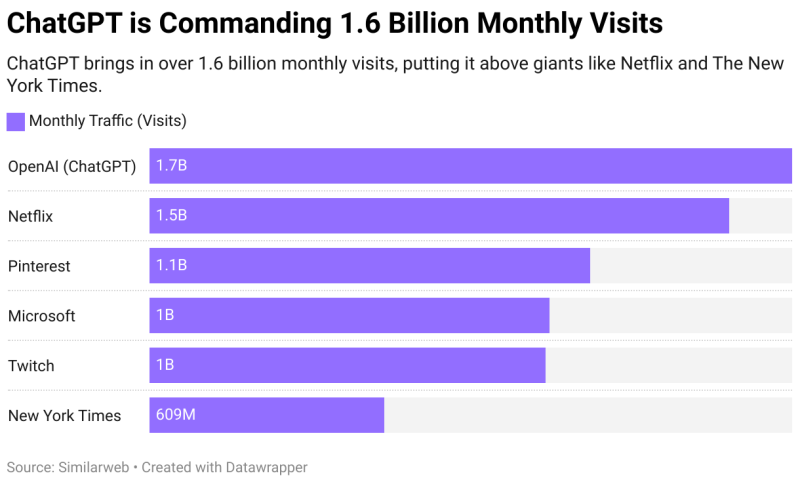

AI tools have evolved from novelty to necessity, and their user numbers are growing so quickly that they are becoming some of the most visited sites in the world. ChatGPT receives over 1.6 billion monthly visits, making it more popular than Netflix, Pinterest or the New York Times. These platforms now serve as gatekeepers between your organisation and your audiences, especially younger generations who use ChatGPT the way previous generations used Google.

The impact goes far beyond casual searches. Professionals across government, policy, and research sectors increasingly use AI tools to synthesise reports and create briefings in minutes rather than weeks. When David tested this by asking ChatGPT and Perplexity to research childhood poverty in the UK, both tools properly cited organisations like the Joseph Rowntree Foundation. The implication is clear: if your content isn't accessible to these tools, your voice gets excluded from important conversations and research.

The case for allowing AI bot access

The primary argument for allowing access centres on reach and authority. Cultural organisations gain discovery opportunities for their collections and exhibitions while providing enhanced accessibility through AI-powered explanations. Universities become visible in the complex course comparison searches that prospective students increasingly rely on. Policy organisations can influence AI-generated research reports and establish authority beyond their usual branded search terms.

Perhaps most importantly, you maintain control over your narrative. When AI tools can access your authoritative, up-to-date content directly, they're less likely to rely on secondary sources that might misrepresent your work. As Daniel from the Joseph Rowntree Foundation put it during the session: "We might lose some traffic, but if we get referenced as a trusted, cited source of information online, that's supporting our vision and mission."

The risks of allowing access

We’d generally recommend allowing AI bots to crawl your site, for the reasons listed above. But there are legitimate concerns, and you need to make the call based on the needs of your organisation.

Content can be misrepresented when synthesised by AI tools, and there are genuine copyright issues, particularly for organisations with touring exhibitions or licensed content they don't own. You'll likely see reduced direct website traffic as people get answers without clicking through to your site, and you lose contextual control over how your information appears.

There's also the fundamental shift in how success is measured. Traditional metrics focused on driving traffic to your website, but the new paradigm asks whether you're effectively pushing authoritative content into AI models where people increasingly consume information. We can track this using tools like SE Ranking, but it’s not as exact as tracking web visits.

The risks of blocking access

Blocking AI bots brings its own significant risks. You risk invisibility in AI-powered discovery, which is increasingly how people find information about organisations like yours. This creates a competitive disadvantage against organisations that allow access and establish authority in AI responses.

Crucially, blocking doesn't prevent AI tools from discussing your organisation. They'll simply rely on secondary sources, which may carry outdated or inaccurate information from third-party sites. David demonstrated this with examples where unofficial sites provided incorrect information about museum exhibitions that then appeared in AI summaries alongside official sources.

Making the decision work for you

The choice isn't binary. You can selectively allow certain platforms while blocking others, restrict access to sensitive areas like staff directories, or create optimised content specifically for AI consumption through llms.txt files.
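Selective access of this kind is usually expressed in a site's robots.txt file. The sketch below is illustrative rather than a recommended policy: it defines a hypothetical robots.txt and uses Python's standard urllib.robotparser to check what each crawler would be allowed to fetch. The crawler names (GPTBot, CCBot, PerplexityBot), the example.org URLs and the /staff/ path are assumptions for the example, so check each vendor's documentation for the user-agent tokens they actually use. Bear in mind that robots.txt is advisory: well-behaved crawlers follow it, but it does not technically enforce anything.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt. Bot names and paths are illustrative assumptions,
# not a recommended policy for any particular organisation.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /staff/

User-agent: CCBot
Disallow: /

User-agent: *
Disallow: /staff/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def access_report(parser: RobotFileParser, agents: list[str], urls: list[str]) -> dict:
    """Map each (agent, url) pair to True if the rules allow that fetch."""
    return {
        (agent, url): parser.can_fetch(agent, url)
        for agent in agents
        for url in urls
    }


parser = build_parser(ROBOTS_TXT)
agents = ["GPTBot", "CCBot", "PerplexityBot"]
urls = [
    "https://example.org/collections/",
    "https://example.org/staff/directory",
]
report = access_report(parser, agents, urls)

for (agent, url), allowed in report.items():
    print(f"{agent:15} {'allow' if allowed else 'block':6} {url}")
```

Under these example rules, GPTBot can read the collections pages but not the staff directory, CCBot is blocked from everything, and any crawler without its own entry falls back to the wildcard rule. Running a check like this against your real robots.txt before publishing it is a cheap way to confirm that the file does what you intended.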

The key is establishing authority. Before making any decision, test how AI tools currently represent your organisation. Ask ChatGPT or Perplexity about your sector and see if you appear, whether the information is accurate, and if your expertise is recognised. This baseline will inform your strategy.

Our recommendation

Unless you have specific reasons to block AI bots, allow access while focusing on providing accurate, authoritative content. The opportunities for reach and influence typically outweigh the risks, especially for organisations whose mission involves education, research, or public engagement.

The landscape has shifted dramatically since ChatGPT launched in November 2022. What began as a defensive reaction from many organisations has evolved into recognition that AI tools are now essential infrastructure for information discovery.

The bottom line: AI tools will discuss your organisation whether you allow access or not. The question is whether that discussion will be based on your authoritative content or someone else's interpretation of your work.

Watch the recording of our AI Bots – Block or not? webinar.

Want to develop your organisation's AI strategy? Get in touch to discuss your specific situation and develop a tailored approach.